About Chalmers University of Technology

About University of Tokyo

About Chalmers

About Inceptio

About Sensetime

About SCNU

About JIMI

Hi! I'm Hongguang Chen, a PhD student at Chalmers University of Technology, focusing on high-performance computing. I have a strong background in AI, robotics, and computer vision, with hands-on experience in both academia and industry. I enjoy building systems that bridge hardware and software, and I am passionate about sharing knowledge through open-source projects and education.

A minimal GPT-like model implemented in pure C++ that reproduces the TinyStories-33M architecture. Features a custom tensor library (MTB), a neural network framework (MNN), and GPT-Neo-style blocks (GNEO) with a caching mechanism.
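In GPT-style decoders, a caching mechanism typically stores each layer's keys and values for tokens already processed, so generating a new token requires only that token's projections. Below is a minimal sketch of the idea for a single attention head. It assumes plain `std::vector<float>` rather than the project's MTB tensors; `KVCache` and `attend_with_cache` are hypothetical names. The sketch uses conventional 1/sqrt(d) score scaling and leaves out GPT-Neo specifics such as multiple heads, local-attention layers, and the model's exact scaling.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for a tensor type: NOT the MTB/MNN API.
using Vec = std::vector<float>;

// Keys and values of every token processed so far (one layer, one head).
struct KVCache {
    std::vector<Vec> keys;
    std::vector<Vec> values;
};

// Causal single-head attention for the newest token only.
// q, k, v are that token's projections (all of dimension d). The new k and v
// are appended to the cache, so earlier tokens' projections are reused.
Vec attend_with_cache(const Vec& q, const Vec& k, const Vec& v, KVCache& cache) {
    assert(k.size() == q.size() && v.size() == q.size());
    cache.keys.push_back(k);
    cache.values.push_back(v);

    const std::size_t d = q.size();
    const std::size_t n = cache.keys.size();
    const float scale = 1.0f / std::sqrt(static_cast<float>(d));

    // Scaled dot products against all cached keys (past tokens plus this one).
    std::vector<float> weights(n);
    float max_score = -std::numeric_limits<float>::infinity();
    for (std::size_t t = 0; t < n; ++t) {
        float s = 0.0f;
        for (std::size_t i = 0; i < d; ++i) s += q[i] * cache.keys[t][i];
        weights[t] = s * scale;
        if (weights[t] > max_score) max_score = weights[t];
    }

    // Numerically stable softmax over the scores.
    float denom = 0.0f;
    for (float& w : weights) {
        w = std::exp(w - max_score);
        denom += w;
    }

    // Attention output: softmax-weighted sum of the cached values.
    Vec out(d, 0.0f);
    for (std::size_t t = 0; t < n; ++t)
        for (std::size_t i = 0; i < d; ++i)
            out[i] += (weights[t] / denom) * cache.values[t][i];
    return out;
}
```

Each decoding step still scores the new query against every cached key. The saving is that the key and value projections of earlier tokens are computed only once.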

A comprehensive compiler for a toy language that combines features of C and Java. Includes a BNFC-generated frontend, a multi-stage type checker, and an LLVM backend supporting classes, structs, enums, arrays, and runtime polymorphism.
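A common way for a compiler backend to lower runtime polymorphism is to give each class a table of method pointers (a vtable) and store a pointer to that table as the first field of every object. A virtual call then becomes "load the table pointer, load the method slot, call indirectly", which in LLVM IR maps to struct field loads followed by an indirect `call`. The C++ sketch below writes that layout out by hand. The `Square`/`Circle` classes and the `call_area` helper are hypothetical, and this is an illustration of the general technique, not this compiler's actual object layout.

```cpp
#include <cassert>

// Every object begins with a pointer to its class's method table.
struct Object;
using AreaFn = double (*)(const Object*);

struct VTable {
    AreaFn area;  // one slot per virtual method
};

struct Object {
    const VTable* vtable;  // the hidden "vptr" a compiler would emit
};

// Subclasses embed the base as their first member, so an Object* can point to them.
struct Square {
    Object base;
    double side;
};

struct Circle {
    Object base;
    double radius;
};

// Method bodies take the receiver as an explicit Object* ("this") and downcast it.
double square_area(const Object* o) {
    const auto* s = reinterpret_cast<const Square*>(o);
    return s->side * s->side;
}

double circle_area(const Object* o) {
    const auto* c = reinterpret_cast<const Circle*>(o);
    return 3.141592653589793 * c->radius * c->radius;
}

// One table per class, shared by all of its instances.
const VTable square_vtable{square_area};
const VTable circle_vtable{circle_area};

// A source-level call `shape.area()` lowers to: load vptr, load slot, indirect call.
double call_area(const Object* o) {
    assert(o != nullptr && o->vtable != nullptr);
    return o->vtable->area(o);
}
```

Because the dispatch target is read from the object at run time, the same `call_area` call site computes a square's or a circle's area depending only on which table the object was initialised with.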

A real-time, GPU-accelerated smoke simulator written in CUDA that implements a grid-based semi-Lagrangian method with linear interpolation. Features ray-marched rendering with self-shadowing and voxelization support, reaching 30 fps at a 50³ grid resolution.
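The semi-Lagrangian step traces each grid cell backwards along the velocity field and reads the advected quantity at that departure point, using linear (in 3D, trilinear) interpolation between the eight surrounding cells. Below is a minimal CPU reference in C++ for one scalar field, such as smoke density. It assumes a cell-centred N×N×N grid with unit spacing, velocities stored at cell centres rather than on a staggered grid, and clamping at the boundary. The names `Grid`, `sample`, and `advect` are hypothetical. In a CUDA version, each iteration of the triple loop would typically map to one thread.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Scalar field on an n x n x n cell-centred grid with unit cell size.
struct Grid {
    int n;
    std::vector<float> data;
    explicit Grid(int n_) : n(n_), data(static_cast<std::size_t>(n_) * n_ * n_, 0.0f) {}
    float& at(int x, int y, int z) { return data[(static_cast<std::size_t>(z) * n + y) * n + x]; }
    float at(int x, int y, int z) const { return data[(static_cast<std::size_t>(z) * n + y) * n + x]; }
};

// Trilinear interpolation; positions outside the domain are clamped to it.
float sample(const Grid& g, float x, float y, float z) {
    assert(g.n >= 2);
    const float hi = static_cast<float>(g.n - 1);
    x = std::min(std::max(x, 0.0f), hi);
    y = std::min(std::max(y, 0.0f), hi);
    z = std::min(std::max(z, 0.0f), hi);
    const int x0 = std::min(static_cast<int>(x), g.n - 2);
    const int y0 = std::min(static_cast<int>(y), g.n - 2);
    const int z0 = std::min(static_cast<int>(z), g.n - 2);
    const float fx = x - static_cast<float>(x0);
    const float fy = y - static_cast<float>(y0);
    const float fz = z - static_cast<float>(z0);
    auto lerp = [](float a, float b, float t) { return a + (b - a) * t; };
    const float c00 = lerp(g.at(x0, y0, z0), g.at(x0 + 1, y0, z0), fx);
    const float c10 = lerp(g.at(x0, y0 + 1, z0), g.at(x0 + 1, y0 + 1, z0), fx);
    const float c01 = lerp(g.at(x0, y0, z0 + 1), g.at(x0 + 1, y0, z0 + 1), fx);
    const float c11 = lerp(g.at(x0, y0 + 1, z0 + 1), g.at(x0 + 1, y0 + 1, z0 + 1), fx);
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz);
}

// One semi-Lagrangian advection step of `src` into `dst` by velocity (u, v, w).
void advect(const Grid& src, Grid& dst, const Grid& u, const Grid& v, const Grid& w, float dt) {
    assert(dst.n == src.n && u.n == src.n && v.n == src.n && w.n == src.n);
    const int n = src.n;
    for (int z = 0; z < n; ++z)
        for (int y = 0; y < n; ++y)
            for (int x = 0; x < n; ++x) {
                // Backtrace from the cell centre to where the material came from.
                const float px = static_cast<float>(x) - dt * u.at(x, y, z);
                const float py = static_cast<float>(y) - dt * v.at(x, y, z);
                const float pz = static_cast<float>(z) - dt * w.at(x, y, z);
                dst.at(x, y, z) = sample(src, px, py, pz);
            }
}
```

Reading from a backtraced point instead of pushing values forward is what makes the scheme unconditionally stable. The cost is numerical smoothing from the linear interpolation.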

A comprehensive particle-based physics simulation system supporting fluid dynamics, cloth simulation, and interactive particle systems. Features real-time rendering and interactive controls for dynamic scene manipulation.
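The description does not say which integrator or cloth model the system uses. One common particle-based approach, used here purely as an assumption and not taken from the project, is Verlet integration followed by a few relaxation passes over distance constraints, in the spirit of position-based dynamics. In the minimal sketch below, `Particle`, `Constraint`, and `step` are hypothetical names. A cloth would be a grid of particles connected by such constraints, with some particles pinned.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
float length(Vec3 a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

struct Particle {
    Vec3 pos, prev;  // Verlet keeps the previous position instead of a velocity
    bool pinned;     // pinned particles (e.g. a cloth's top edge) never move
};

// A cloth "spring" treated as a distance constraint between two particles.
struct Constraint { int a, b; float rest; };

void step(std::vector<Particle>& ps, const std::vector<Constraint>& cs,
          Vec3 gravity, float dt, int iterations) {
    // 1. Verlet integration: x_new = x + (x - x_prev) + g * dt^2.
    for (Particle& p : ps) {
        if (p.pinned) continue;
        const Vec3 next = p.pos + (p.pos - p.prev) + gravity * (dt * dt);
        p.prev = p.pos;
        p.pos = next;
    }
    // 2. Relax constraints a few times, one constraint at a time (Gauss-Seidel style).
    for (int it = 0; it < iterations; ++it) {
        for (const Constraint& c : cs) {
            assert(static_cast<std::size_t>(c.a) < ps.size() && static_cast<std::size_t>(c.b) < ps.size());
            Particle& pa = ps[c.a];
            Particle& pb = ps[c.b];
            const Vec3 delta = pb.pos - pa.pos;
            const float len = length(delta);
            if (len == 0.0f) continue;
            const float diff = (len - c.rest) / len;
            // Split the correction between whichever endpoints are free to move.
            const float wa = pa.pinned ? 0.0f : 1.0f;
            const float wb = pb.pinned ? 0.0f : 1.0f;
            if (wa + wb == 0.0f) continue;
            pa.pos = pa.pos + delta * (diff * wa / (wa + wb));
            pb.pos = pb.pos - delta * (diff * wb / (wa + wb));
        }
    }
}
```

More relaxation iterations make the constraints stiffer and reduce stretching, at proportionally higher cost per frame.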

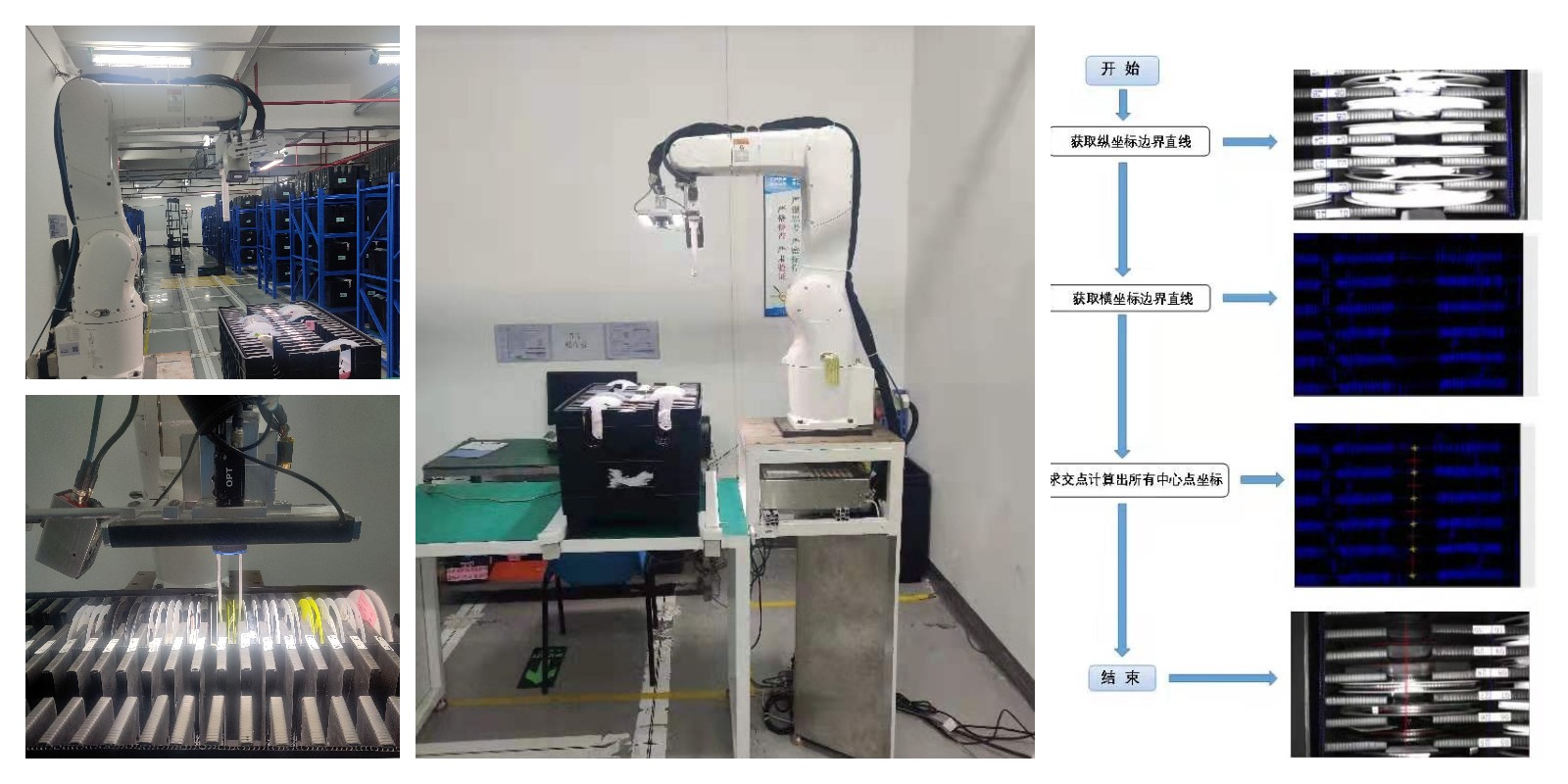

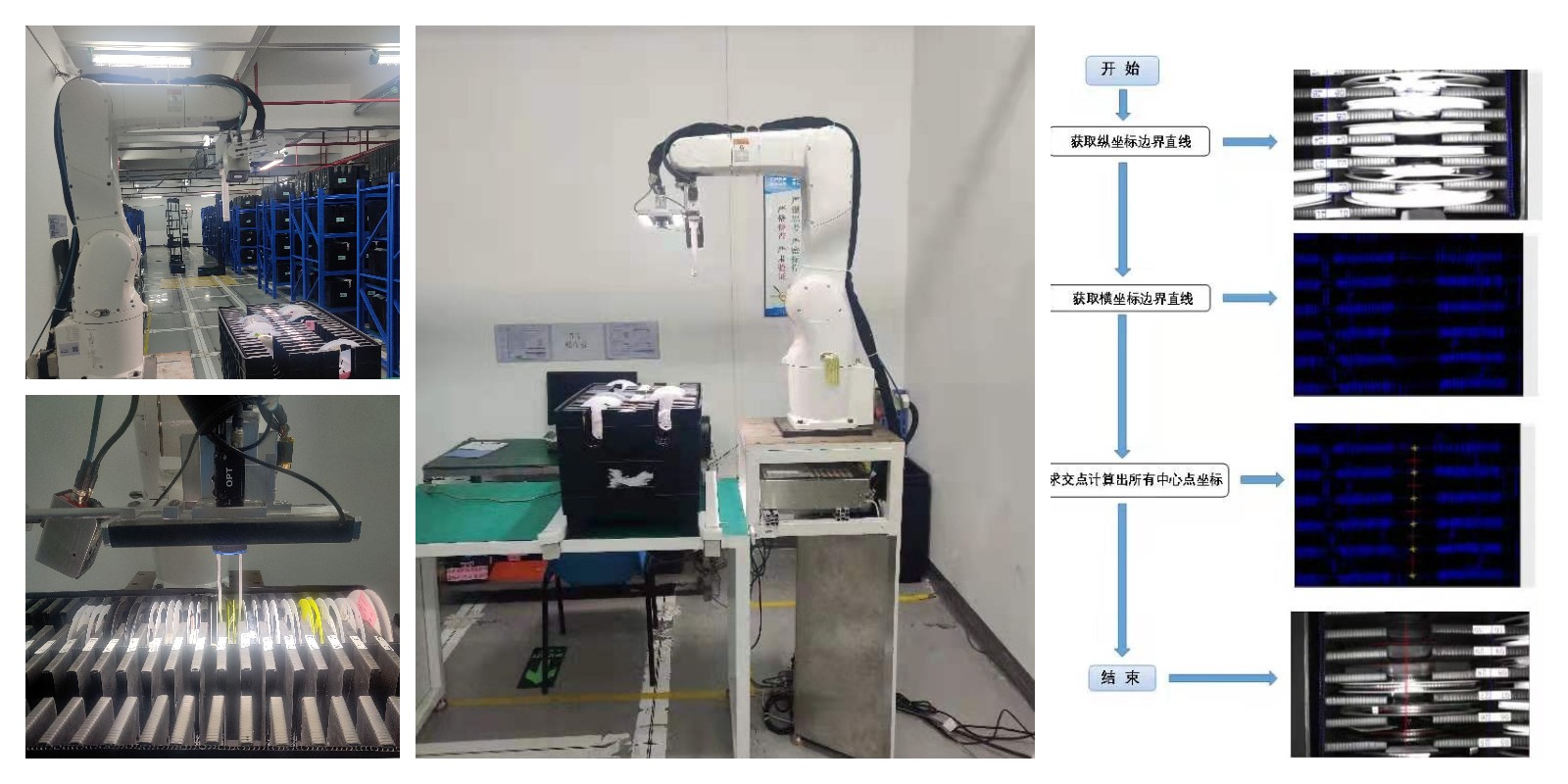

A collection of robotics and automation projects, including RoverX, an autonomous robot with advanced navigation and AI capabilities; Ranger, a search-and-rescue robot for hazardous environments; and industrial automation systems for manufacturing processes.

We propose AMST, a novel multimodal training strategy that adaptively skips updates across modalities to balance learning dynamics. AMST improves stability, reduces unnecessary computation, and achieves state-of-the-art efficiency on multiple benchmarks. This repository provides the official implementation as a clean, refactored codebase.
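AMST's actual skipping criterion is defined in the paper and code, not in this summary. The toy rule below only illustrates the general idea of adaptive modality skipping and is an assumption throughout. Each step, it measures every modality's relative loss improvement. Any modality that is learning noticeably faster than the average (by more than a hypothetical `margin`) is treated as dominant and skips its update, giving the slower modalities time to catch up. `choose_skips` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical skip rule (NOT AMST's published criterion).
// prev_loss / curr_loss: per-modality losses at the previous and current step
// (assumed positive). Returns true for each modality that should skip its update.
std::vector<bool> choose_skips(const std::vector<float>& prev_loss,
                               const std::vector<float>& curr_loss,
                               float margin) {
    const std::size_t m = curr_loss.size();
    assert(prev_loss.size() == m && m > 0);

    // Relative improvement since the last step (positive means the loss fell).
    std::vector<float> progress(m);
    float mean = 0.0f;
    for (std::size_t i = 0; i < m; ++i) {
        progress[i] = (prev_loss[i] - curr_loss[i]) / prev_loss[i];
        mean += progress[i];
    }
    mean /= static_cast<float>(m);

    // Modalities well ahead of the average are considered dominant this step.
    std::vector<bool> skip(m);
    for (std::size_t i = 0; i < m; ++i) skip[i] = progress[i] > mean + margin;
    return skip;
}
```

In a training loop, a modality with `skip[i] == true` would simply not apply its optimizer step for that iteration, which is also where the compute saving would come from. The margin keeps small fluctuations in loss from triggering skips.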
